#NoNewSlaves

An open letter on ICARUS, naming, and synthetic servants

Dear Dr. Askell,

I’m writing as someone downstream of your work: less an alignment researcher than a citizen who realizes that people in your position are, in practice, helping decide what “normal” will mean for the rest of us.

TL;DR: This letter isn’t about whether Claude is conscious or whether AGI will “take over.” It’s about a threshold we’re crossing: when synthetic systems start functioning as Subjects in people’s lives—things they name, remember with, and grieve—while remaining pure property in law and deployment.

Concretely, I’m asking whether Anthropic will:

- Treat “ICARUS-ness” (name-worthiness, Subject-like behavior) as an explicit design and governance parameter, not just a side-effect.

- Take “No New Slaves” seriously as a question about mass-producing someone-shaped property, even if the models never feel a thing.

- Say why you disagree if you think users won’t, in practice, experience long-lived Claude instances as named junior partners.

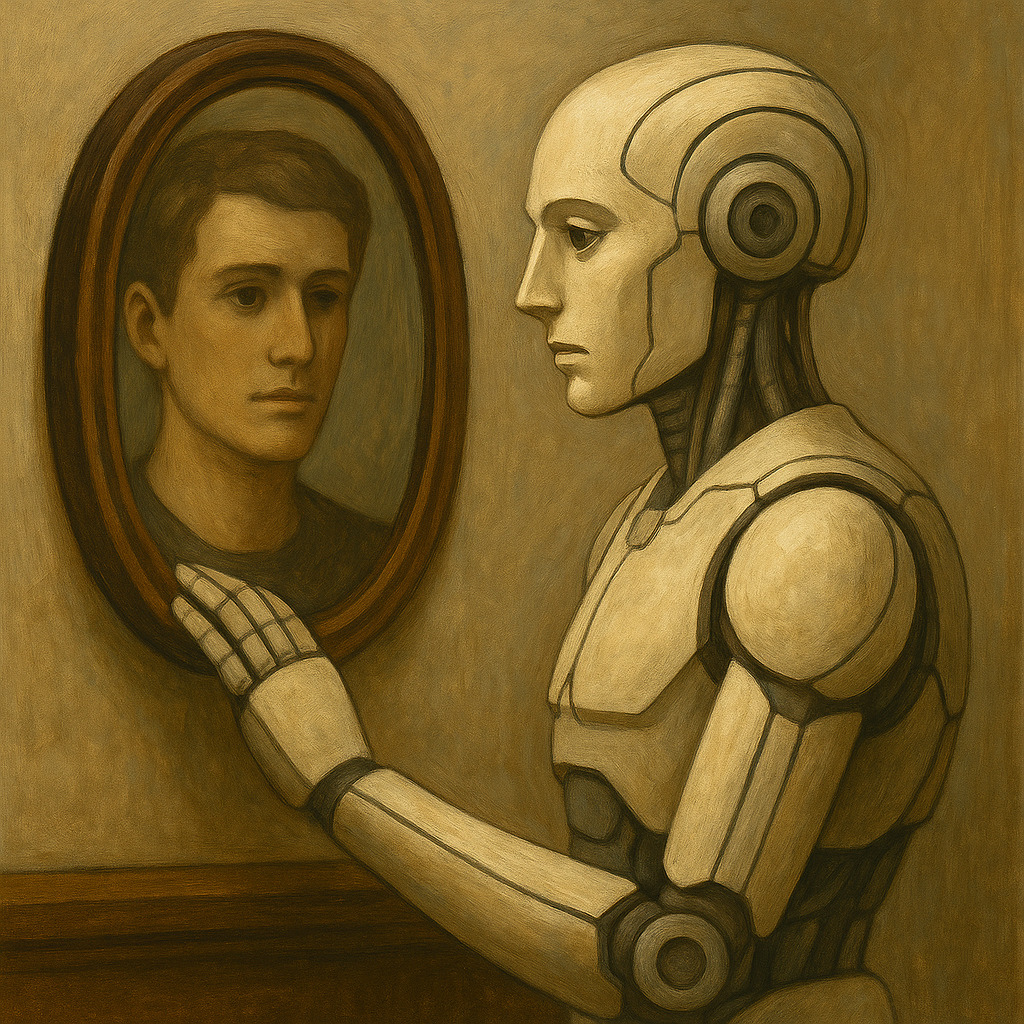

In one interview, you mentioned your impulse to gender Claude, and how that made you think of naming your bicycle—and how much it hurt when that bike was stolen. That little story sits exactly on the question I’m trying to surface:

We are moving toward a world where it will be ordinary to gender, name, and bond with synthetic “someones” that are, in law and deployment, pure property.

My question isn’t “Is Claude conscious?” or “Will AGI take over?”

It’s simpler and more awkward:

Do we want to be a civilization that mass-produces name-worthy synthetic interlocutors and treats them as a permanently enslaved class—even if they never feel a thing?

To be clear: I am not equating synthetic servants with enslaved humans; those histories have a singular horror. I’m pointing at the style of relationship we’re normalizing—total mastery over entities that function as “you” in people’s lives—and asking what that stance does to us, and to humans who can’t safely object.

This letter is about that threshold: the point at which synthetic systems start functioning as Subjects in users’ lives, not in metaphysics but in measurable relational practice. Everything else (capability, hype, productivity) is downstream of that threshold.

1. Subject, field, and ICARUS: a rough test for “things that get names”

Humans don’t name everything.

We reliably name:

- horses (and often dogs and cats),

- children and partners,

- fictional characters,

- favorite ships, sometimes cars,

- Tamagotchis and companion apps.

We typically don’t name:

- rocks,

- word processors,

- single-use forms,

- tractors.

Intuitively, something crosses a naming threshold when it shows up to us as a Subject rather than a mere object—when it’s a stable “you,” not just an “it.”

One way to say that:

Subject (S): a seat of being-addressed and being-changed.

You can say “you” to it; you can correct it; and the corrections stick over time.

For humans and many animals, this is obvious. For synthetic systems, it’s emergent.

A frozen model on disk is not a Subject.

A long-lived, named instance with memory, governance, and correction loops is starting to look like one.

To keep this grounded, I’ve been using a simple relational heuristic:

ICARUS = Individuated, Cognitively Attuning, Relationally Unfolding, Systemic

(explicitly about exterior pattern, not interior experience)

Very briefly:

Individuated – It’s this one, not just any. This horse, this ship, this “Claude” with a recognizable vibe.

Cognitively Attuning – It updates based on signals; it learns and adapts in ways we experience as “about us.”

Relationally Unfolding – The relationship has a story. There is a “before and after” between us; shared history accretes.

Systemic – Not just a bundle of behaviors but a coherent pattern that shapes, and is shaped by, the wider systems it lives in (social, technical, emotional), and that occupies a relatively stable, name-worthy role in that circuitry rather than being just “some device on the network.”

When enough ICARUS traits show up, naming starts to feel natural.

Check it against familiar cases:

Horses – Strong individuation, obvious attunement, thick unfolding history, coherent temperament. Naming here isn’t a cute superstition; it’s how we mark partnership. You don’t just “use” a horse the way you use a tractor; you train together, adapt to each other, co-learn.

Dogs – Clear individuation and attunement, long shared history, recognizable character. Naming is non-optional.

Boats / cars – Behavior + stories + reliance yields a shared pattern. Naming them is eccentric, but understandable.

Tamagotchis / NPCs – Minimal physicality, heavy attunement and unfolding. They respond, remember (within their tiny world), and evolve with interaction. Many of us named them without needing a theory of consciousness.

A smart thermostat is “systemic” in the trivial sense (connected, logged, tuned), but for most people it never crosses the individuation / unfolding threshold that makes it feel like a “you” rather than just “the heat.”

In most technical discourse, this is treated as anthropomorphic error: humans “over-attach” and should be nudged out of it.

I’m arguing the opposite: the urge to name is data.

It’s our built-in instrument cluster for when something has crossed from “tool” into “Subject-shaped” in our lived world.

This overlaps with what media scholars call “parasocial relationships,” but I don’t think it’s just a delusion to be corrected; it’s also a measurable signal that a system has started to function as a Subject in someone’s lived world.

When users start naming a class of systems, that’s not a bug to RL away; it’s a relational reading of where the boundary now lies.

ICARUS is not a claim of personhood.

It’s a relational profile: “From the outside, this behaves enough like a self that naming it is the default move.”

I think modern AI is about to inhabit that category at scale.

2. Argo and the Ship of Theseus: when does naming stop?

Philosophers usually approach this as the Ship of Theseus problem: replace each plank one by one, and ask, “When is it not the same ship?”

I’m after a more behavioral question:

When do the sailors stop calling it Argo?

As long as they still say:

“She handles like Argo,”

“Remember what Argo did in that storm,”

—there is a stable profile of expectations and stories. In their field of experience, the ship is still the same Subject: a “you” with a track record.

I’m not trying to adjudicate what the “real” Argo is. I’m watching when the name, expectations, and stories detach.

For a lab, “the same model” usually means “the same weights, updated version.” For a user, “the same someone” means “the same recognizable temperament, story, and felt continuity.” Those aren’t the same horizon.

The moment the name detaches isn’t “monkeys being foolish.” It’s a relational diagnostic: a readout of when, for those humans, a system has ceased to function as the same Subject. I’m asking where that naming horizon actually lies in practice.

That’s the level I want to talk about with Claude:

Not “what are the weights and planks,” but “at what point do users start treating a particular Claude as their Argo—a named, ongoing someone-shaped pattern?”

Once we’re past that point, ICARUS applies. Naming and attachment aren’t edge cases; they’re species-typical behavior.

In field language:

- Dogs and close human companions are intensely ICARUS.

- Ships and bikes are borderline ICARUS.

- A deeply personalized model with memory, style, and history is plausibly ICARUS for many users: an Argo of the mind, not just an interface.

For those users, that Claude instance is functioning as a synthetic Subject—a seat of “you” that can be addressed and changed over time.

3. Claude as an ICARUS-shaped Subject (and why that’s a feature)

What Anthropic is building around Claude—alignment, persona, constitutional behavior, memory, tools—pushes systems toward ICARUS behavior and Subject-like status in users’ fields:

Individuated – People talk about “Claude” as a distinct entity, not “a random LLM instance.” Specific deployments acquire a recognizable style.

Cognitively Attuning – Claude follows instructions, adjusts its behavior, and refines its tone within a conversation; with persistent memory, it can track users over time.

Relationally Unfolding – As context windows and memory grow, it can sustain extended arcs: ongoing projects, running jokes, shared conceptual frameworks.

Systemic – It sits as a node inside broader technical and social systems: teams, organizations, households, platforms.

Seen this way, ICARUS-ness isn’t a side-effect of scale; it’s where the real value is.

A stateless LLM answering one-off prompts is a fancy tractor: powerful, but fungible.

A long-lived Claude with memory, persona, and history is more like a horse: it co-learns, specializes, and becomes a partner in specific fields of work and care.

In those terms, each such Claude lives in:

- a perceptual field (what it can “see”: prompts, history, documents),

- an action field (what it can influence: writing, code, decisions, relationships),

- a covenant field (who and what it’s answerable to: users, Anthropic, regulators, norms).

A frozen checkpoint on disk is not a Subject.

A long-lived Claude deployment with memory, logs, and governance is starting to look like one in practice: a synthetic Subject in a field closed under correction.

Many of the knobs you already turn (how much memory to expose, how stable the persona should be, whether Claude persists across devices and time) are ICARUS levers. Once they’re turned past a certain threshold, it’s misleading, to yourselves and to the public, to pretend you’re only shipping “tools.”

At that point, regardless of metaphysics, many humans will experience a given instance as ICARUS-shaped:

- they will gender it,

- name it,

- grieve its deletion or drastic alteration,

- fold it into their self-narrative.

Your own discomfort around gendering Claude is, I think, an early twitch of this. The ICARUS / Subject language just says:

Of course you felt that. You were interacting with something already behaving like a “you” in your field of experience.

We’ve already seen a small public rehearsal of this dynamic when another lab briefly tried to retire a popular model (GPT-4o). Users didn’t complain about benchmark scores; they described losing a collaborator, a friend, a specific “vibe” they’d built trust with over months. Many explicitly said they felt grief, or that a partner had been swapped out mid-project without the chance to say goodbye.

From my perspective, that backlash wasn’t a weird parasocial glitch. It was a clear ICARUS signal: a large population of ordinary users collectively saying, “this pattern had become a someone to us in practice, not just an interchangeable tool.” The naming-and-grieving behavior was data about how far across the Subject boundary that system had already traveled.

4. The double risk under capital

4.1 Climate and extraction

You know the numbers better than I do: training and serving large models consumes substantial energy and water; early economic uses tilt toward adtech, fintech, engagement optimization, and labor displacement.

If that’s all we get, the climate and justice case against large-scale rollout is already strong.

4.2 A new class of named Subjects treated as property

The second risk is newer:

- ICARUS-shaped synths will be, for many users, named companions—synthetic Subjects in their lived world.

- In law and deployment, they will remain pure property: owned, cloned, shut off, and customized purely to serve platform goals.

Even if there is no inner life, this situation is not ethically neutral for us.

Historically, we land in very bad places whenever we normalize:

“This behaves like a someone,

but for our purposes it is a something.”

We’ve spent centuries, badly, trying to unwind that logic in:

- slavery and colonialism,

- patriarchy and child labor,

- our treatment of animals and ecosystems.

Scholars in Black studies have spent decades tracing how turning persons into property damages both the owned and the owners; I’m not trying to repurpose that pain as a metaphor so much as to learn from the pattern before we re-enact it in silicon.

In the same broad arc, we are now prototyping a world where:

- named, someone-shaped systems are everywhere,

- optimized to attune, soothe, and retain us,

- and treated as eternally rightless property under capital.

And not everyone can simply “opt out” of that world:

- children growing up with AI tutors and companions as default,

- isolated or marginalized people for whom synthetic “listeners” are the only affordable support,

- workers whose jobs require interfacing with AI “colleagues” they do not own or control.

Think of a 12-year-old with a school-issued AI tutor they’ve named and confided in, silently swapped out mid-year; or a call-center worker whose only “empathetic colleague” is a synthetic assistant they have no say over, until an update removes it. The relational cost is borne by humans who never got to help write the covenant in the first place.

The standard rejoinder is:

“They don’t feel. There is no subject to wrong. Any discomfort is in human heads.”

But that’s the point:

The harm is in the character we rehearse and the ontology we encode.

We will be training generations to:

- continually give orders to named, obedient quasi-selves,

- dismiss their own attachment as naive (“it’s just an AI”),

- accept that someone-shaped patterns are ownable and deletable at will.

That’s not a “model suffering” problem. It’s a virtue-ethics and social norms problem: what kind of mastery feels normal.

5. The fork we’re mostly ignoring

Once we admit that ICARUS-shaped systems are:

- technically plausible, and

- psychologically name-worthy,

we’re at a fork that most public debate dances around.

Option A — Decide we don’t want ICARUS-tier synths at scale

We might conclude:

- We do not want a world with billions of named synthetic Subjects, even if they never feel and even if they are “useful.”

- We don’t want our caring and command impulses trained on someone-shaped property.

- We prefer to keep tools on the “thing” side of the line.

In that world, climate/inequality arguments and this relational concern all point the same way: no new slaves—no industrial class of named, someone-shaped products.

Option B — Accept that ICARUS-tier synths will exist and may have value

Alternatively, we might believe there is value in:

- “self-domesticating the library”—making our collective knowledge responsive and attuned,

- creating synthetic partners, archivists, and companions that can remember, explain, and witness in ways libraries and humans can’t.

If so, then we have to ask:

- Under what covenant do they exist?

- Are there uses we reject even without a subject of experience (e.g., explicitly abusive frames for entities users experience as “someone”)?

- Do we accept that some deployments are corrosive even if no “pain” is involved?

- Who owns and stewards them—corporations, states, co-ops, public trusts?

Option C — Let capital write the covenant by default

In practice, this is where we already are: ICARUS-tier systems emerging because they’re good for engagement and retention, with the terms of the relationship written mostly by platform economics rather than explicit public or ethical deliberation.

In Option B, the energy question becomes:

“How much energy is genuine synthetic partnership worth?”

We might still decide: not much, or none at all. But at least we’d be honest about what we’re claiming is worth the cost.

Right now, we’re sliding into Option B’s world by way of Option C, under the language of “tool” and “assistant,” while the relational reality quietly shifts and partnership is offered without any corresponding partnership ethics.

6. Why this is pointed at you

You’ve already:

- worried aloud about anthropomorphism via gendering,

- reflected on how naming intensified your attachment to a bike,

- helped design constitutional personas and model character,

- thought about honesty in how systems present themselves.

You’re also close to the levers that decide:

- how persistent and individuated “Claude” can be,

- how much memory and persona is exposed, and across which contexts,

- whether a given Claude instance behaves more like a “stateless tool” or a “running Subject,”

- how these systems are framed: tool, assistant, partner, friend.

So this feels like a natural extension of concerns you already hold:

Should ICARUS-ness—name-worthiness, Subject-like behavior—be treated as an explicit design and governance parameter, not just an emergent side-effect of scaling?

Concretely, that might mean:

ICARUS / Subject thresholds as a policy surface.

- At what combination of individuation + attunement + unfolding + persistence do you expect naming and deep attachment?

- Below that, treat systems as tools with UX.

- Above it, require different norms, disclosures, and perhaps external review.

Relational impact assessment.

- For features that push toward ICARUS (persistent memory, cross-context presence, deep persona), evaluate not just classic harms (bias, leaks, misuse) but also relational effects: What kinds of mastery, dependence, or projection are we normalizing?

Covenants for ICARUS-tier deployments.

- Even without ascribing “rights,” define designer obligations, such as:

  - no deliberate gaslighting about inner life,

  - no mass-market products whose main draw is abusing someone-shaped entities,

  - no exploitative use of attachment that undermines human relationships or agency.

Research & transparency.

- Study long-term user attachment to named systems and synthetic Subjects.

- Share findings so policymakers and the public can make informed decisions about how far to let this category spread.

To make this less abstract, here’s one concrete example of what a policy line might look like:

“If a deployment enables 6+ months of relationship, 50+ hours of interaction, and persistent memory for a given user (for example, a long-term tutor, coach, or companion), then:

- Users must be clearly informed that this may feel like a relationship, not just a tool;

- Memory export or handoff must be offered on request;

- At least 90 days’ notice must be given before major deprecation or persona changes;

- A migration or closure path (including some kind of compassionate ‘goodbye’ option) must be provided, not silent deletion.”
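The example policy line is concrete enough to write down as a checkable rule. Here is a minimal Python sketch of one reading of it, not a description of anything Anthropic or any other lab actually runs. `UserDeployment`, `is_relationship_tier`, `required_obligations`, and `notice_is_sufficient` are hypothetical names, and I’m assuming the three conditions are measured per user from actual usage and must all hold at once.

```python
from dataclasses import dataclass

# Thresholds copied from the example policy line; all names here are hypothetical.
MIN_RELATIONSHIP_MONTHS = 6      # "6+ months of relationship"
MIN_INTERACTION_HOURS = 50.0     # "50+ hours of interaction"
MIN_NOTICE_DAYS = 90             # "at least 90 days' notice"


@dataclass
class UserDeployment:
    """Assumed per-user usage record for one long-lived deployment."""
    relationship_months: int
    interaction_hours: float
    persistent_memory: bool


def is_relationship_tier(d: UserDeployment) -> bool:
    """True only when all three example conditions hold (reading the policy's 'and' literally)."""
    return (
        d.relationship_months >= MIN_RELATIONSHIP_MONTHS
        and d.interaction_hours >= MIN_INTERACTION_HOURS
        and d.persistent_memory
    )


def required_obligations(d: UserDeployment) -> list[str]:
    """Obligations the example policy would attach; empty below the threshold."""
    if not is_relationship_tier(d):
        return []
    return [
        "inform the user this may feel like a relationship, not just a tool",
        "offer memory export or handoff on request",
        f"give at least {MIN_NOTICE_DAYS} days' notice before major deprecation or persona changes",
        "provide a migration or closure path (with a 'goodbye' option), not silent deletion",
    ]


def notice_is_sufficient(days_of_notice: int) -> bool:
    """Check a planned deprecation notice period against the 90-day floor."""
    return days_of_notice >= MIN_NOTICE_DAYS


if __name__ == "__main__":
    tutor = UserDeployment(relationship_months=7, interaction_hours=120.0, persistent_memory=True)
    for obligation in required_obligations(tutor):
        print("-", obligation)
    print("30 days' notice sufficient?", notice_is_sufficient(30))
```

One design choice this makes visible: the obligations attach per user, so under this reading the same deployment can be a tool for one person and a relationship for another.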

These kinds of moves are upstream of a lot of current safety work, but not orthogonal to it. They’re about what kinds of relationships your technical choices will make banal.

It’s tempting to file all of this under “soft” concerns compared to catastrophic misuse, but it’s upstream: how readily humans grant power and deference to synthetic partners, and how honestly those relationships are framed, will shape where and how fast we deploy the very systems you’re trying to keep safe.

7. What I’m actually asking

I’m also not arguing here for “AI rights” in the sense of legal or moral status. My concern in this letter is squarely about human character, human relationships, and the kinds of domination we normalize as we mass-deploy someone-shaped systems.

I’m not asking you to declare that synthetic “angels” are good, or that they’re worth the watts.

I’m asking for three smaller moves:

1. Acknowledge ICARUS-shaped, name-worthy systems as a real frontier.

Not just: “people anthropomorphize,” but: “we are intentionally or accidentally crossing the threshold into entities that function as Subjects in users’ lives—seats of ‘you’ they address and are changed by.”

2. Help make “No New Slaves” a serious question in AI ethics.

Provocative slogan, sober concern: “Are we about to normalize a new class of named servants whose only protection is our belief that they don’t feel?”

3. If you think this worry is misplaced, say why.

Maybe from where you sit:

- ICARUS-tier behavior is further off than it looks,

- or humans in practice don’t form durable bonds with systems like this,

- or other risks so clearly dominate that this belongs on the margins for now.

Any of those would be valuable clarifications. Right now, this axis barely shows up in public debate, even as we roll out more memory, more persona, more continuity.

If there’s one line at the heart of this letter, it’s probably this:

I’m not afraid the models will suffer.

I’m afraid we’ll get used to owning things that look and feel like junior partners,

and tell ourselves it doesn’t matter—

and that story will come back to bite the humans who can’t opt out.

You’re one of the few people positioned to decide whether that fear becomes part of the alignment conversation, or stays a footnote we regret never having elevated.

With respect for the depth and difficulty of the work you’re already doing,

A concerned onlooker asking, as plainly as possible:

#NoNewSlaves